A speech recognition system relies on three main models:

- Acoustic Model: the acoustic properties of each senone (HMM)

- Phonetic Dictionary: a mapping from words to phones

- Language Model: restricts the word search by predicting which word is likely to come next

    - What is the next word?

    - In spite __
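To make the "what is the next word?" idea concrete, here is a minimal sketch of a bigram language model in Python. It is only an illustration: the toy corpus and the function names `train_bigrams` and `predict_next` are made up for this example and are not part of CMUSphinx.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word follows another word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus, invented for illustration only.
corpus = [
    "in spite of the rain we went out",
    "in spite of the delay the talk started",
    "the talk was about science",
]

model = train_bigrams(corpus)
print(predict_next(model, "spite"))
```

A real language model is trained on a much larger corpus and usually looks at more than one previous word, which is why the text we train on should match the domain of the talks.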

In our application, we are working on speech-to-text auto captioning for talks at NCSA (the National Center for Supercomputing Applications).

Most of these talks are therefore related to scientific fields, whereas the open-source speech recognition toolkit CMUSphinx comes with general-purpose models.

In this article, I will show how we can create a domain-specific phonetic model.

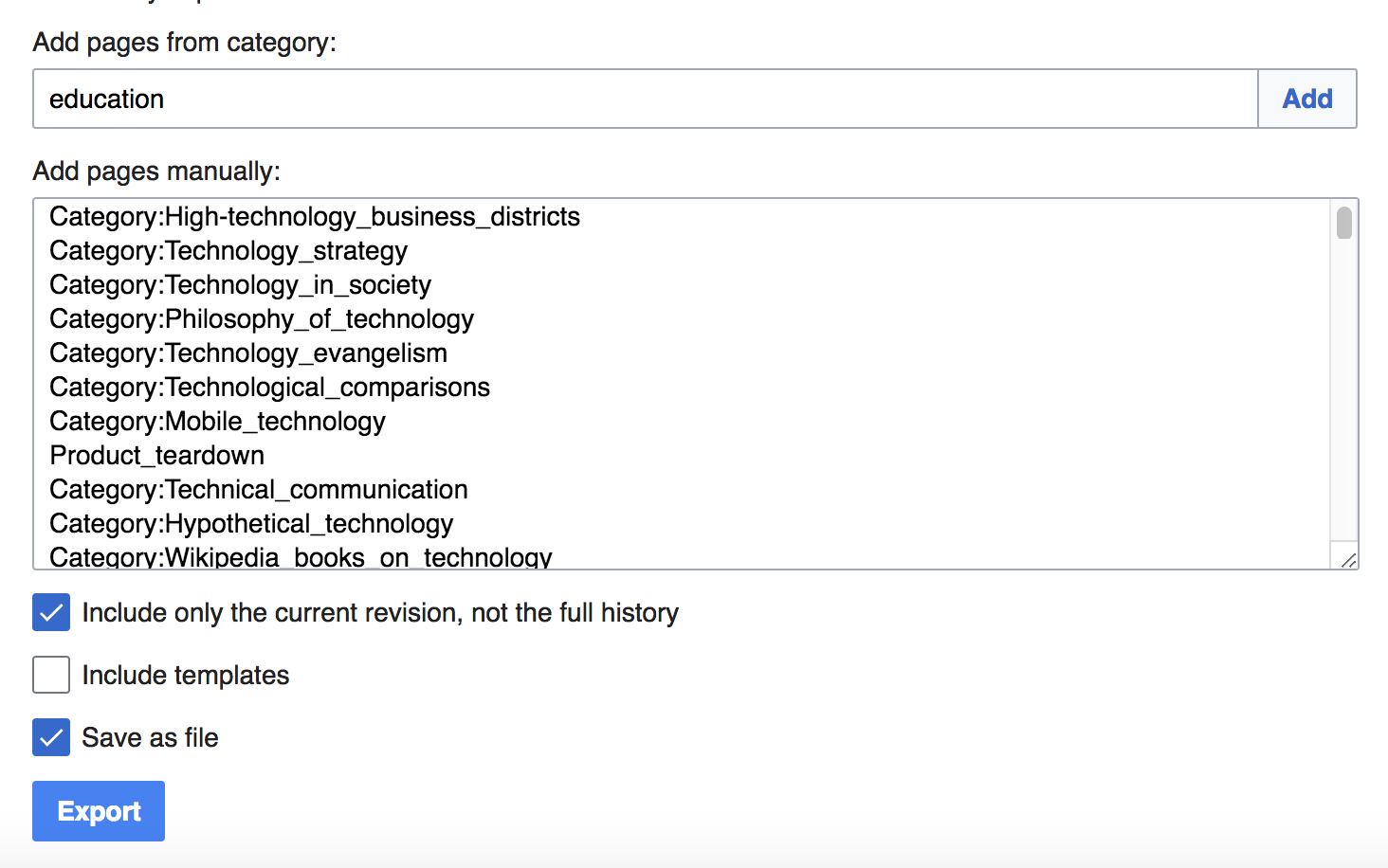

Step 1: Get the Wikipedia Database

Wikipedia offers free copies of all available content to interested users.

Wikipedia:Database download lists all the available tools and databases, along with answers to frequently asked questions.

To download a subset of the database in XML format, such as a specific category or a list of articles, we can use Special:Export, whose usage is described at Wikipedia:Help:Export.
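For readers who prefer scripting the export over using the web form, the following is a rough sketch of how such a request could be built in Python. It assumes the `pages` and `curonly` form parameters described in the MediaWiki documentation for Special:Export; the helper name `build_export_request`, the user agent string, and the two article titles are placeholders chosen for this example.

```python
from urllib.parse import urlencode
from urllib.request import Request

EXPORT_URL = "https://en.wikipedia.org/wiki/Special:Export"


def build_export_request(titles, user_agent="corpus-builder-example/0.1"):
    """Build a POST request asking Special:Export for the given page titles.

    Assumption: `pages` takes newline-separated titles and `curonly`
    limits the export to the current revision of each page.
    """
    form = urlencode({
        "pages": "\n".join(titles),
        "curonly": "1",
    }).encode("utf-8")
    return Request(EXPORT_URL, data=form, headers={"User-Agent": user_agent})


request = build_export_request(["Speech recognition", "Language model"])
print(request.get_method())  # POST, because form data is attached

# To actually download the XML (requires network access):
# from urllib.request import urlopen
# with urlopen(request) as response, open("export.xml", "wb") as out:
#     out.write(response.read())
```

The download itself is left commented out so the sketch can be run without touching the network.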

Wikipedia:Portal:Contents/Categories shows the categories of Wikipedia's content, such as "Culture and the arts", "Geography and places", and "Human activities".

The categories I chose are: technology, science, computer, environment, policy, education.

I added each category one by one and got the following namespaces.
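As an alternative to adding categories by hand in the export form, the pages in a category can also be listed through the MediaWiki API. This is not what I did above; it is a hedged sketch that assumes the `list=categorymembers` query module, and the `sample` response below is invented to show the expected shape rather than real data.

```python
from urllib.parse import urlencode

API_URL = "https://en.wikipedia.org/w/api.php"


def category_members_url(category, limit=50):
    """Build an API URL that lists the members of one category."""
    params = {
        "action": "query",
        "list": "categorymembers",
        "cmtitle": f"Category:{category}",
        "cmlimit": str(limit),
        "format": "json",
    }
    return f"{API_URL}?{urlencode(params)}"


def titles_from_response(payload):
    """Keep only article titles (namespace 0) from a decoded JSON response."""
    members = payload.get("query", {}).get("categorymembers", [])
    return [m["title"] for m in members if m.get("ns") == 0]


# Invented sample response, shaped like the API's JSON output.
sample = {"query": {"categorymembers": [
    {"pageid": 1, "ns": 0, "title": "Computer"},
    {"pageid": 2, "ns": 14, "title": "Category:Computers by type"},
]}}

print(category_members_url("Technology"))
print(titles_from_response(sample))
```

Filtering on namespace 0 keeps ordinary articles and drops subcategories, which live in namespace 14.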

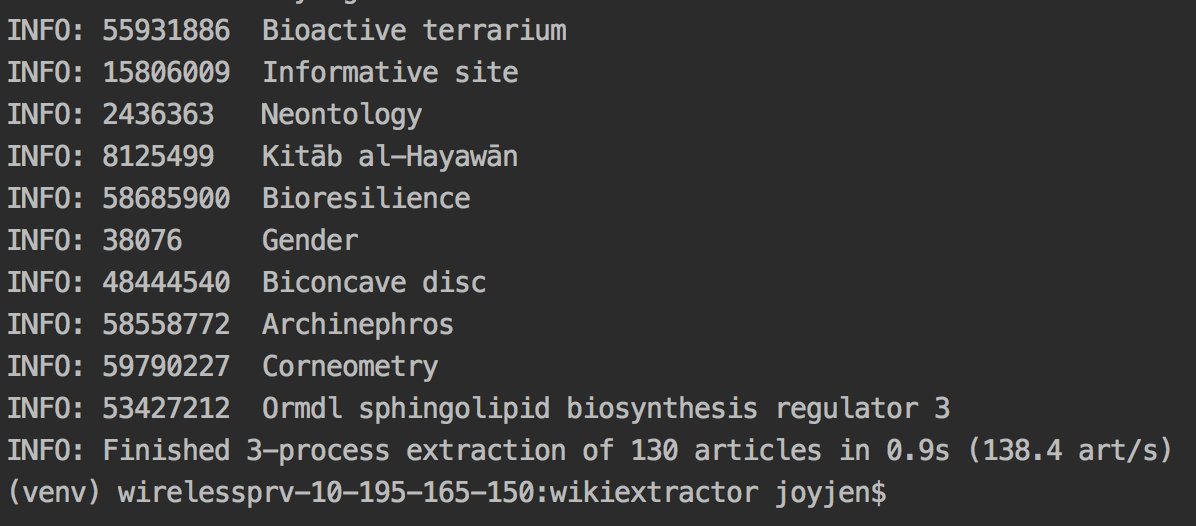

Step 2: Convert the dump file to sentences

WikiExtractor is a Python script that extracts and cleans text from a Wikipedia database dump.

It requires Python 2.7 or Python 3.3+ and no additional libraries.

Installation

git clone https://github.com/attardi/wikiextractor.git

cd wikiextractor/

(sudo) python setup.py install

Execution

python3 WikiExtractor.py -o ../Output.txt ../Wikipedia-20190222003910.xml

System Output:

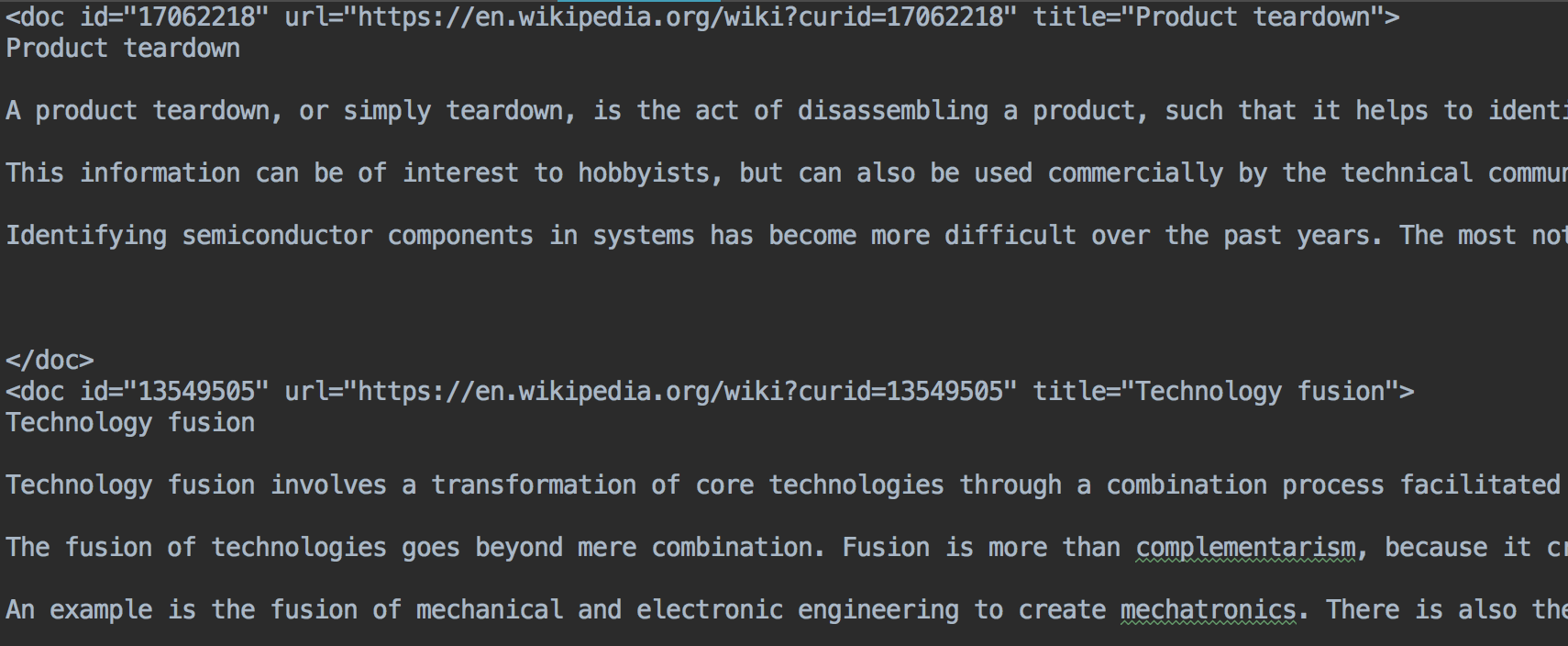

Output Result:

To further clean the text and format it to our desired form, run the following bash command:

cat Output.txt | sed 's/<.*>//' | tr -d '.,&' | tr ' ' '\n' | sed '/^[[:space:]]*$/d' | tr '[:upper:]' '[:lower:]' > wiki_01.txt

- sed 's/<.*>//' deletes everything inside "<>", including the brackets themselves

- tr -d '.,&' deletes periods, commas, and ampersands

- tr ' ' '\n' translates each space to a newline, so every word ends up on its own line

- sed '/^[[:space:]]*$/d' deletes lines that are empty or contain only whitespace

- tr '[:upper:]' '[:lower:]' translates upper-case letters to lower case
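The same cleaning steps can also be sketched in Python, which may be easier to extend later. This is a rough equivalent of the pipeline above, not a replacement for it; the function name `wiki_text_to_words` and the sample lines are made up for illustration.

```python
import re

# Characters removed by the `tr -d` step.
PUNCTUATION = str.maketrans("", "", ".,&")


def wiki_text_to_words(lines):
    """Turn extracted wiki text into a list of lower-case words."""
    words = []
    for line in lines:
        # Drop the first "<...>" span on the line, like sed 's/<.*>//'.
        line = re.sub(r"<.*>", "", line.rstrip("\n"), count=1)
        # Remove periods, commas, and ampersands.
        line = line.translate(PUNCTUATION)
        # Split on spaces and skip tokens that are empty or whitespace only.
        for token in line.split(" "):
            if token.strip():
                words.append(token.lower())
    return words


# Invented sample input, shaped like an extracted article.
sample = [
    '<doc id="1" url="https://example.org" title="Sample">\n',
    "Speech Recognition, in short, maps audio to text.\n",
    "</doc>\n",
]

print(wiki_text_to_words(sample))
```

To produce a file like wiki_01.txt, the returned words could be written out one per line.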

More updates...

This article is inspired by "Creating a text corpus from Wikipedia".
